Connectors

Ingesting data into, or exporting data from, an IOTICSpace and its Digital Twins requires a software application built on the IOTICS API. In IOTICS, this application is called a Connector.

Introduction to Connectors

A Connector is a software application that interacts with Digital Twins in an IOTICSpace. It acts as the bridge between an IOTICSpace and a data source or data consumer.

We distinguish between three different types:

1. Publisher Connector: imports data into an IOTICSpace

2. Follower Connector: exports data from an IOTICSpace

3. Synthesiser Connector: transforms existing data within an IOTICSpace

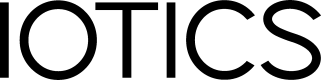

Publisher Connector

A Publisher Connector is a software application that works as a bridge between a data source and an IOTICSpace. It enables external data to be imported into an IOTICSpace and published by one or more Digital Twins, and therefore shared with and used by other Digital Twins in the ecosystem.

A Publisher Connector:

- Typically handles a single data source and consists of one Twin Model with one or more related Twins from Model. If multiple sources have to be imported and synchronised, several Twin Models may be needed, each with its own metadata;

- Periodically imports and publishes new data through the Twins from Model. The data can be published either in batches or as single samples, and at fixed or variable intervals. Some Twins may also need to share data at a different frequency from others;

- Runs continuously. Once the Twins have been created, it is good practice to let all of them maintain a consistent state in the IOTICSpace, whether that means actively sharing new data, staying idle, or becoming obsolete and being deleted automatically.
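The behaviour listed above can be sketched in plain Python. This is only an illustration of the pattern, not the IOTICS API: `TwinModel`, `TwinFromModel`, `run_publisher` and `read_source` are hypothetical names, publishing is simulated by appending to a list, and the loop runs for a fixed number of ticks rather than continuously so that it can be tried out.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TwinModel:
    """Hypothetical template holding the metadata shared by its Twins."""
    label: str
    feed_id: str


@dataclass
class TwinFromModel:
    """Hypothetical Twin created from a TwinModel, with its own interval."""
    twin_id: str
    model: TwinModel
    interval_s: int  # how often (in seconds) this Twin shares data
    published: List[dict] = field(default_factory=list)

    def is_due(self, now_s: int) -> bool:
        # A Twin publishes whenever the clock is a multiple of its interval.
        return now_s % self.interval_s == 0

    def publish(self, sample: dict) -> None:
        # Assumption: a real Connector would call the IOTICS API here.
        self.published.append(sample)


def run_publisher(
    twins: List[TwinFromModel],
    read_source: Callable[[str, int], dict],
    ticks: int,
) -> None:
    """Simulate `ticks` seconds of a Publisher Connector's main loop."""
    for now_s in range(ticks):
        for twin in twins:
            if twin.is_due(now_s):
                twin.publish(read_source(twin.twin_id, now_s))
```

With two Twins from the same Model and intervals of 1 and 2 seconds, four ticks make the first Twin publish four samples and the second publish two, which mirrors Twins sharing data at different frequencies.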

Representation of a Publisher Connector managing three Twins

How to create a Publisher Connector with the IOTICS API

Implementing a Publisher Connector leads to the creation of a set of Twin Publishers that, simultaneously or in turn, publish data.

Have a look here to see an example of how to create a Publisher Connector.

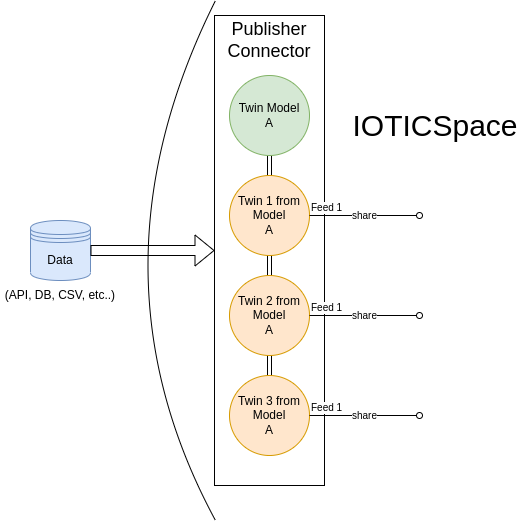

Follower Connector

The Follower Connector mirrors the Publisher Connector. It is an application that works as a bridge between an IOTICSpace and a data consumer and/or data storage.

A Follower Connector can run in the same IOTICSpace as the Twin Publishers or in a different one.

Use remote search and remote follow if the IOTICSpaces are not the same.

Representation of a Follower Connector in the same Space as the Twin Publishers

How to create a Follower Connector with the IOTICS API

As with the Publisher Connector, a set of Twins is needed: here, Twin Followers that ask the Twin Publishers' feeds for the last shared value.

Have a look here to see an example of how to create a Follower Connector that exports data into a Postgres DB.
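As a rough, self-contained illustration of that flow, the sketch below asks each feed for its last shared value and writes it to a database. It makes several assumptions: `Feed` and `export_last_values` are hypothetical names rather than IOTICS API calls, the `temperature` field and `readings` table are invented for the example, and Python's built-in `sqlite3` stands in for Postgres so the code runs without a server.

```python
import sqlite3
from typing import Dict, Optional


class Feed:
    """Hypothetical stand-in for a Twin Publisher's feed."""

    def __init__(self) -> None:
        self._last: Optional[dict] = None

    def share(self, value: dict) -> None:
        self._last = value

    def last_shared(self) -> Optional[dict]:
        return self._last


def export_last_values(feeds: Dict[str, Feed], db: sqlite3.Connection) -> int:
    """Store each feed's last shared value; return the number of rows written."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS readings (twin_id TEXT, temperature REAL)"
    )
    rows = []
    for twin_id, feed in feeds.items():
        value = feed.last_shared()
        if value is not None:  # skip feeds that have not shared anything yet
            rows.append((twin_id, value["temperature"]))
    db.executemany("INSERT INTO readings VALUES (?, ?)", rows)
    db.commit()
    return len(rows)
```

A real Follower Connector would call this kind of export step repeatedly, or react to new feed data as it arrives, rather than run it once.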

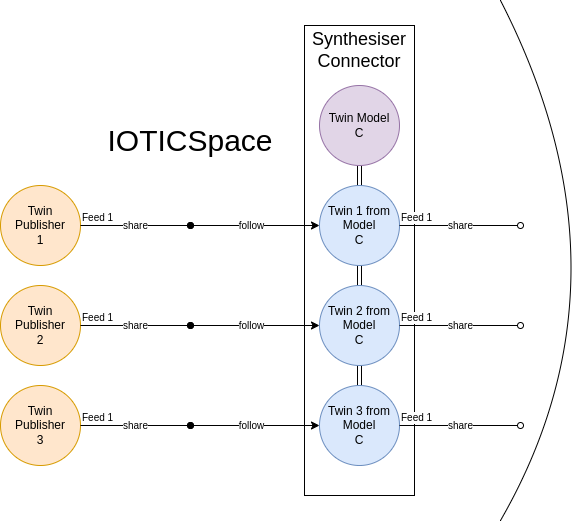

Synthesiser Connector

A Synthesiser Connector, or simply Synthesiser, is a continuously running application whose Follower Twins receive data, transform it and send the result back into the IOTICSpace.

In other words, a Synthesiser creates a set of Twins that:

- continuously follow Publisher Twins' feeds;

- "synthesise" the received data, that is, transform or combine it into new data;

- publish the new data back as a feed.

Representation of a Synthesiser Connector

How to create a Synthesiser Connector with the IOTICS API

A Synthesiser application creates a set of Follower Twins with one or more Feeds, so that they can both receive data and share the newly synthesised data.
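The follow, transform and publish cycle can be sketched as below. This is a minimal in-memory model under stated assumptions, not the IOTICS API: `Feed` and `SynthesiserTwin` are hypothetical names, feeds carry plain floats, and following is modelled as a callback invoked on every share.

```python
from typing import Callable, List


class Feed:
    """Hypothetical in-memory feed: followers are called on every share."""

    def __init__(self) -> None:
        self._followers: List[Callable[[float], None]] = []

    def follow(self, callback: Callable[[float], None]) -> None:
        self._followers.append(callback)

    def share(self, value: float) -> None:
        for callback in self._followers:
            callback(value)


class SynthesiserTwin:
    """Hypothetical Twin that follows one feed and publishes a derived one."""

    def __init__(self, source: Feed, transform: Callable[[float], float]) -> None:
        self.output = Feed()  # the new feed shared back into the space
        self._transform = transform
        source.follow(self._on_data)

    def _on_data(self, value: float) -> None:
        # Receive, transform, then publish the new value as a feed.
        self.output.share(self._transform(value))
```

For example, a `SynthesiserTwin` built with a Celsius-to-Fahrenheit function republishes every temperature shared on the source feed in Fahrenheit, and other Twins can follow `output` like any other feed.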
