IOTICS Host Library

| Experience | Supported Languages | Identity Options | Protocol |
|---|---|---|---|
| Beginner | Python | Included | REST + STOMP (abstracted) |

Introduction

The IOTICS Host Library is designed to get you up and running as quickly as possible, with easy-to-integrate Python wrappers for our client libraries. It also creates your identity credentials as you go, which streamlines the process further.

Our host library provides a Python wrapper to abstract calls to our Python client libraries:

- iotic.web.rest.client

- iotic.web.stomp

- iotics-identity

If you are using a language other than Python, you can use our REST and STOMP interfaces instead. See the IOTICS WebAPI section for more details.

By the end of this section you will have created and run a fully functional example that demonstrates the basics of publishing data to, and receiving data from, IOTICS.

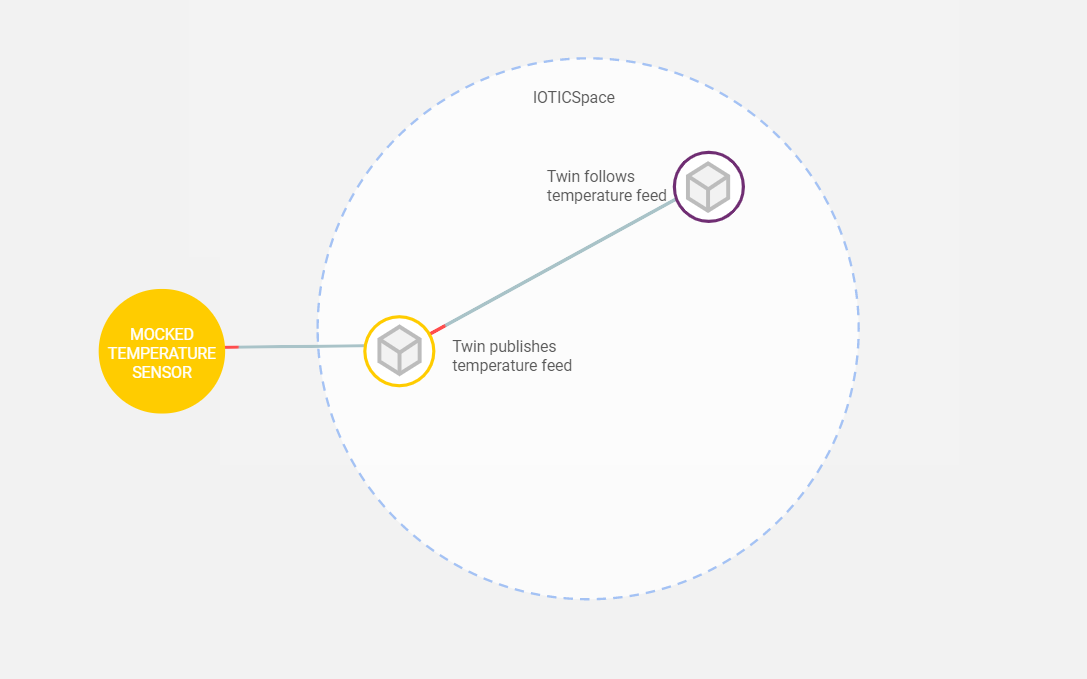

Let's imagine you have data that is kept separate from the rest of your system's architecture but is needed by multiple consumers. If you create a twin that represents this data and publishes it in a feed, the followers you create can follow and consume that feed. Your system then gets the data it needs, when it needs it.

For this example we will create a searchable twin that represents and publishes the temperature of a physical location. We will then create a follower that is interested in the temperature of that location and will automatically search for your twin and start updating itself with that temperature every 5 minutes.
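The following small in-memory model in plain Python illustrates how a publishing twin relates to its followers. It is a sketch only. `Feed`, `Twin`, `Catalogue` and `Follower` are hypothetical classes invented for illustration and are not part of the IOTICS Host Library. The location name is also made up. In IOTICS, the twin, its feed and the search all live on the IOTICS host, and the connectors you generate below handle that work for you.

```python
"""In-memory model of the publisher/follower pattern (hypothetical, not the IOTICS API)."""
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Feed:
    """A named stream of values; followers register callbacks on it."""
    name: str
    subscribers: List[Callable[[float], None]] = field(default_factory=list)

    def share(self, value: float) -> None:
        # Publishing pushes the new value to every follower.
        for callback in self.subscribers:
            callback(value)


@dataclass
class Twin:
    """A searchable twin representing one physical location."""
    label: str
    location: str
    feeds: Dict[str, Feed] = field(default_factory=dict)


class Catalogue:
    """Stand-in for search: finds registered twins by location."""

    def __init__(self) -> None:
        self._twins: List[Twin] = []

    def register(self, twin: Twin) -> None:
        self._twins.append(twin)

    def search(self, location: str) -> List[Twin]:
        return [t for t in self._twins if t.location == location]


class Follower:
    """Searches for twins by location and keeps the latest temperature."""

    def __init__(self, catalogue: Catalogue, location: str) -> None:
        self.latest: Optional[float] = None
        for twin in catalogue.search(location):
            feed = twin.feeds.get("temperature")
            if feed is not None:
                feed.subscribers.append(self._on_value)

    def _on_value(self, value: float) -> None:
        self.latest = value


def demo() -> Optional[float]:
    catalogue = Catalogue()
    twin = Twin(label="Office temperature", location="London")
    twin.feeds["temperature"] = Feed("temperature")
    catalogue.register(twin)

    follower = Follower(catalogue, "London")
    # In the guide's example, the follower receives a new reading every 5 minutes.
    twin.feeds["temperature"].share(21.5)
    return follower.latest


if __name__ == "__main__":
    print(demo())
```

The follower never talks to the publisher directly. It only finds the twin through search and then reacts to whatever the twin's feed shares. That is the decoupling that lets several consumers use the same data.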

We will start by creating templates of publisher and follower connectors that you can use for future connectors of your own. Once the templates have been made you will use the publisher connector to create, populate and publish a digital twin, then use the follower connector to connect to the published twin and grab its data.

Prerequisites

Before starting with the IOTICS Host Library you will need:

- An IOTICS account

- Cookiecutter

- The IOTICS Host Library

- Python v3.8+ experience

If you do not have access to the IOTICS Host Library then please send an email to [email protected].

Creating connector templates

The first step is to create your publisher and follower connector templates using Cookiecutter.

Note:

Windows users need to remove `post_gen_project.py` from both `follower_template` and `publisher_template` in the `iotics-host-lib/builder/iotics/` directory before continuing.

From the iotics-host-lib root folder, run the following command to create a Publisher template:

```bash
cookiecutter builder/iotics/publisher_template/
```

Then run the following command to create a Follower template:

```bash
cookiecutter builder/iotics/follower_template/
```

To generate the default example with sample code, press the Enter key for all options without providing any input.

Now that we've created the connector templates we can start using them to create and consume the data.

We will start with the Publisher template to create and publish your data, then the Follower template to consume the data.

Both templates also contain instructions in the generated README files in their respective folders.

Example template creation

```
$ cookiecutter builder/iotics/publisher_template/
project_name [A project name used in the doc (ex: Random Temperature Generator)]: Random Temperature Generator
publisher_dir [publisher_directory_name (ex: random-temp-pub)]: random-temp-pub
module_name [python module name (ex: randpub)]: randpub
command_name [command line name (ex: run-randpub)]: run-randpub
conf_env_var_prefix [conf environment variable prefix (ex: RANDPUB_)]: RANDPUB_
publisher_class_name [publisher class name (ex: RandomTempPublisher)]: RandomTempPublisher
Select add_example_code:
1 - YES
2 - NO
Choose from 1, 2 [1]: 1
```

This will create the following structure:

```
├── random-temp-pub
│   ├── Dockerfile
│   ├── Makefile
│   ├── randpub
│   │   ├── conf.py
│   │   ├── exceptions.py
│   │   ├── __init__.py
│   │   └── publisher.py
│   ├── README.md
│   ├── setup.cfg
│   ├── setup.py
│   ├── tests
│   │   └── unit
│   │       └── randpub
│   │           ├── conftest.py
│   │           ├── __init__.py
│   │           ├── test_conf.py
│   │           └── test_publisher.py
│   ├── tox.ini
│   └── VERSION

4 directories, 15 files
```
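If you prefer to script template generation instead of answering the prompts, Cookiecutter also provides a Python API. The following sketch passes the answers from the example session through `extra_context` with `no_input=True`. It assumes the template variables have the same names as the prompts and that `add_example_code` accepts `"YES"`. Check the template's `cookiecutter.json` before relying on it.

```python
"""Generate the publisher template without interactive prompts.

Assumption: the template's variable names match the prompts in the example
session and add_example_code accepts "YES"/"NO". Run from the iotics-host-lib
root folder.
"""
from typing import Dict

TEMPLATE_DIR = "builder/iotics/publisher_template/"


def publisher_answers() -> Dict[str, str]:
    """Answers matching the example session."""
    return {
        "project_name": "Random Temperature Generator",
        "publisher_dir": "random-temp-pub",
        "module_name": "randpub",
        "command_name": "run-randpub",
        "conf_env_var_prefix": "RANDPUB_",
        "publisher_class_name": "RandomTempPublisher",
        "add_example_code": "YES",
    }


def generate(output_dir: str = ".") -> str:
    # Imported lazily so the answers can be inspected without Cookiecutter installed.
    from cookiecutter.main import cookiecutter

    # no_input=True skips every prompt; extra_context overrides the template defaults.
    # Returns the path of the generated project directory.
    return cookiecutter(
        TEMPLATE_DIR,
        no_input=True,
        extra_context=publisher_answers(),
        output_dir=output_dir,
    )


if __name__ == "__main__":
    print(generate())
```

The same approach works for the follower template, using that template's own variable names. On Windows, the `post_gen_project.py` note above still applies.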

Building and running

Once you have created a connector with Cookiecutter, follow the Publisher template tutorial to build and run it.

Monitoring and alerting

For metrics, the library uses Prometheus's official Python client. The following example shows how to monitor your components locally. Once your components are set up to expose endpoints for scraping (see the Publisher template tutorial for details), create a `prometheus.yml` config file:

```yaml
# https://prometheus.io/docs/prometheus/latest/configuration/configuration/#configuration-file
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "rules.yml"

scrape_configs:
  # Here it's Prometheus itself.
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9101']

  - job_name: 'random-temp-pub'
    static_configs:
      - targets: ['localhost:8001']

  - job_name: 'random-temp-fol'
    static_configs:
      - targets: ['localhost:8002']
```

Now you can start a local Prometheus server, for example:

```bash
docker run --rm --name prometheus --network host -v"$(realpath ./prometheus.yml)":/prometheus/prometheus.yml prom/prometheus --web.listen-address=:9101
```

Note:

- If you remove the `--rm` flag, the data persists after you stop the container, and you can restart it with `docker start -a prometheus`.
- Using `--network host` is not recommended in a production environment.
- If the default Prometheus port (`9090`) is already in use, you can change it with the argument at the end of the command, `--web.listen-address=:9101`. Passing any arguments replaces the image's default command, so Prometheus then reads its config from `/prometheus/prometheus.yml` instead of `/etc/prometheus/prometheus.yml`. This is why the config file is mounted at that path.
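You can check that Prometheus scrapes the `random-temp-pub` target before your connector is running by serving a stand-in endpoint on port 8001 with only the standard library. This is a hypothetical test helper and is not part of the host library. The metric and label names mirror the queries in the visualisation section, while your real connector exposes its own metrics. Prometheus adds the `job` label itself, based on `job_name`.

```python
"""Stand-in /metrics endpoint on port 8001 (hypothetical test helper).

Serves a fake api_call_count series in the Prometheus text exposition format
so you can confirm the 'random-temp-pub' scrape target works.
"""
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict, Tuple

# (function, failed) -> count; label names mirror the example queries.
CALLS: Dict[Tuple[str, str], int] = {
    ("share_feed_data", "False"): 0,
    ("share_feed_data", "True"): 0,
}


def render_metrics(calls: Dict[Tuple[str, str], int]) -> str:
    """Render counters in the Prometheus text exposition format."""
    lines = [
        "# HELP api_call_count Number of API calls (stand-in).",
        "# TYPE api_call_count counter",
    ]
    for (function, failed), value in sorted(calls.items()):
        lines.append(f'api_call_count{{function="{function}",failed="{failed}"}} {value}')
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/metrics":
            self.send_error(404)
            return
        # Pretend one successful publish happened since the last scrape.
        CALLS[("share_feed_data", "False")] += 1
        body = render_metrics(CALLS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    HTTPServer(("", 8001), MetricsHandler).serve_forever()
```

While it runs, the Prometheus UI's targets page (http://localhost:9101/targets) should show the target as up. Stop the stand-in before you start your connector, because both use port 8001.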

Data visualisation

For data visualisation and export we recommend trying out Grafana's Docker image:

```bash
docker run --rm --name grafana --network host grafana/grafana
```

Note:

You should not use `--network host` in a production environment.

Visit http://localhost:3000/ and log in with `admin` as both the username and the password. Navigate to "Configuration" -> "Add data source" and select Prometheus (or click [here](http://localhost:3000/datasources/new)).

In the "URL" field, enter http://localhost:9101 and press "Save & Test". If everything is set up correctly, you should see "Data source is working".
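You can also create the data source through Grafana's HTTP API (`POST /api/datasources`) instead of the UI. The following sketch assumes the default `admin`/`admin` login and the URLs used above. The data source name `Prometheus` is an arbitrary choice, and Grafana returns an error if a data source with that name already exists.

```python
"""Create the Prometheus data source via Grafana's HTTP API.

Assumes Grafana on localhost:3000 with the default admin/admin login and
Prometheus on localhost:9101. The data source name is an arbitrary choice.
"""
import base64
import json
import urllib.request

GRAFANA_URL = "http://localhost:3000"
PROMETHEUS_URL = "http://localhost:9101"  # http://docker.for.mac.host.internal:9101 on macOS


def datasource_request(user: str = "admin", password: str = "admin") -> urllib.request.Request:
    """Build the POST /api/datasources request (nothing is sent yet)."""
    payload = {
        "name": "Prometheus",
        "type": "prometheus",
        "url": PROMETHEUS_URL,
        "access": "proxy",  # the Grafana server, not the browser, queries Prometheus
    }
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{GRAFANA_URL}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


if __name__ == "__main__":
    with urllib.request.urlopen(datasource_request()) as response:
        print(response.status, json.loads(response.read()))
```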

In the "Explore" view you can use queries like the following to see metrics from the Random Temperature connectors:

- General metrics of the Random Temperature Publisher and Follower (per-second rate): `rate(api_call_count{job=~"random-temp-.*"}[1m])`
- Number of failed feed publishes by the Random Temperature Publisher over the last minute: `increase(api_call_count{job="random-temp-pub", function="share_feed_data", failed="True"}[1m])`
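You can also run the same queries outside Grafana through Prometheus's HTTP API (`GET /api/v1/query`). The following sketch assumes Prometheus is listening on `localhost:9101`, as configured above. It only handles instant-vector results, which is what both queries return.

```python
"""Run PromQL instant queries against Prometheus's HTTP API.

Assumes Prometheus on localhost:9101, as configured in this guide.
"""
import json
import urllib.parse
import urllib.request
from typing import Dict, List

PROMETHEUS_URL = "http://localhost:9101"

FAILED_PUBLISHES = (
    'increase(api_call_count{job="random-temp-pub", '
    'function="share_feed_data", failed="True"}[1m])'
)


def query_url(promql: str, base: str = PROMETHEUS_URL) -> str:
    """Build an instant-query URL; urlencode escapes braces, quotes and spaces."""
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(body: Dict) -> List[Dict]:
    """Turn an instant-vector response into [{'labels': ..., 'value': float}]."""
    if body.get("status") != "success":
        raise RuntimeError(f"query failed: {body.get('error')}")
    # Each result is {"metric": {label: value}, "value": [timestamp, "value as string"]}.
    return [
        {"labels": r["metric"], "value": float(r["value"][1])}
        for r in body["data"]["result"]
    ]


def run_query(promql: str) -> List[Dict]:
    with urllib.request.urlopen(query_url(promql)) as response:
        return parse_vector(json.loads(response.read()))


if __name__ == "__main__":
    for sample in run_query(FAILED_PUBLISHES):
        print(sample["labels"], sample["value"])
```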

OSX notes

The `--network host` flag is not supported on Docker Desktop for Mac.

Instead you can:

- Run Prometheus:

  ```bash
  docker run -p 9101:9090 --rm --name prometheus -v"$(realpath ./prometheus.yml)":/prometheus/prometheus.yml prom/prometheus:latest --config.file=/prometheus/prometheus.yml
  ```

- Run Grafana:

  ```bash
  docker run --rm --name grafana -p 3000:3000 grafana/grafana
  ```

- When configuring the Prometheus data source in Grafana, set the URL to `http://docker.for.mac.host.internal:9101`.
- Make the Prometheus configuration file point to the host using `docker.for.mac.host.internal`:

```yaml
# ...
scrape_configs:
  # Here it's Prometheus itself.
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090']

  - job_name: 'random-temp-pub'
    static_configs:
      - targets: ['docker.for.mac.host.internal:8001']

  - job_name: 'random-temp-fol'
    static_configs:
      - targets: ['docker.for.mac.host.internal:8002']
```

Testing FAQ

- If you are running the IOTICS host locally, running `make unit-tests` in the top level of this repo may fail with `AssertionError: assert 'Max retries exceeded with' in ''`. To fix this, run `make docker-clean-test` on the local host. The tests should then all pass.

Updated over 2 years ago

Start with the Publisher template tutorial to create and publish your data, then the Follower template tutorial to consume the data.